EU AI Act Summary 2026: What Enterprise Teams Need to Do Now

The European Commission had every opportunity to delay the EU AI Act's high-risk deadline. On 19 November 2025, when it released its Digital Omnibus package, industry lobbyists were hoping Brussels would "stop the clock" and give companies more time to prepare. The Commission did exactly the opposite: it tidied up peripheral provisions, strengthened the EU AI Office, and left the core timeline untouched. The date that matters is 2 August 2026, and that date is now anchored in law.

Most enterprise teams are still treating this the way they treated GDPR in 2017: as a compliance problem they can address later, once the lawyers get loud enough. That playbook will fail here because the EU AI Act operates on fundamentally different logic. GDPR is about behaviour and data handling, whereas the AI Act is about product safety and market access. If your AI system falls into the high-risk category and you cannot demonstrate conformity by August 2026, you will face a hard barrier that prevents you from placing that system on the EU market at all.

This post explains what the Act actually requires, who it applies to, and what your team should be doing in the next six months.

1. What the EU AI Act actually is

The EU AI Act is a Regulation, not a Directive. A Directive requires each EU member state to pass its own implementing legislation, which creates variation and delay. A Regulation applies directly and uniformly across all 27 member states, with no national transposition step. The AI Act entered into force on 1 August 2024, and its obligations phase in over the following years.

The high-risk provisions derive from the EU's product safety and market surveillance framework, the same framework that governs medical devices and industrial machinery. From the EU's perspective, a high-risk AI system looks less like a cloud service and more like an X-ray machine. The compliance model follows accordingly: you perform a conformity assessment, you draw up an EU Declaration of Conformity, you affix a CE mark, and you maintain a technical file that can withstand regulatory scrutiny. Without these, your product should not be on the market. Market surveillance authorities and customs officials are empowered to block or withdraw it, and your own customers can refuse to buy it.

Like GDPR, the AI Act applies based on who you affect, not where you are headquartered. If your AI system's output is used in the EU, you are in scope regardless of whether your company has any EU presence.

2. The four gates

The EU AI Act runs four independent checks, and the obligations from each can stack. A single AI system can trigger multiple gates simultaneously.

Gate 1: Prohibited practices (Article 5). Certain AI applications are banned outright. These include social scoring systems, AI that exploits vulnerabilities of specific groups, and real-time remote biometric identification in public spaces (with narrow exceptions). Already enforceable since February 2025.

Gate 2: High-risk systems (Annex III). AI systems used in high-stakes domains trigger the full compliance regime: risk management, technical documentation, conformity assessments, CE marking, and ongoing monitoring. Domains include biometrics, critical infrastructure, employment, credit scoring, law enforcement, and administration of justice. Enforceable from August 2026.

Gate 3: Transparency obligations (Article 50). AI systems that interact with people, detect emotions, or generate synthetic content must disclose their nature. If you run a chatbot, you must tell users they are talking to an AI. Enforceable from August 2026.

Gate 4: General-purpose AI (Chapter V). Providers of foundation models face model-level obligations around transparency and documentation. Enforceable since August 2025.

A credit-scoring chatbot built on a foundation model would trigger Gates 2, 3, and potentially 4. You must satisfy all applicable requirements, not choose among them. For a detailed breakdown of how the gates work, see our EU AI Act compliance guide.
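To make the stacking logic concrete, here is a minimal Python sketch of a first-pass gate screen. The `SystemProfile` fields and the `triggered_gates` function are our own illustrative names, not terms from the Act, and the yes/no answers stand in for a proper legal assessment.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Gate(Enum):
    PROHIBITED = "Gate 1: prohibited practices (Article 5)"
    HIGH_RISK = "Gate 2: high-risk systems (Annex III)"
    TRANSPARENCY = "Gate 3: transparency obligations (Article 50)"
    GPAI = "Gate 4: general-purpose AI (Chapter V)"


@dataclass
class SystemProfile:
    # Hypothetical screening answers for one system (illustrative field names).
    name: str
    uses_prohibited_practice: bool = False
    annex_iii_domain: Optional[str] = None  # e.g. "credit scoring", else None
    interacts_with_people: bool = False
    detects_emotions: bool = False
    generates_synthetic_content: bool = False
    provides_foundation_model: bool = False


def triggered_gates(profile: SystemProfile) -> List[Gate]:
    """Check every gate independently; results stack rather than exclude each other."""
    gates = []
    if profile.uses_prohibited_practice:
        gates.append(Gate.PROHIBITED)
    if profile.annex_iii_domain is not None:
        gates.append(Gate.HIGH_RISK)
    if (profile.interacts_with_people
            or profile.detects_emotions
            or profile.generates_synthetic_content):
        gates.append(Gate.TRANSPARENCY)
    if profile.provides_foundation_model:
        gates.append(Gate.GPAI)
    return gates


# The credit-scoring chatbot from the text, assuming you only deploy the
# underlying foundation model rather than provide it.
chatbot = SystemProfile(
    name="credit-scoring chatbot",
    annex_iii_domain="credit scoring",
    interacts_with_people=True,
)
for gate in triggered_gates(chatbot):
    print(gate.value)
```

With these answers the screen reports Gates 2 and 3. Setting `provides_foundation_model=True` would add Gate 4, which is the "potentially 4" case.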

3. Are you actually in scope?

The AI Act's definition is broader than most enterprise teams assume. Article 3 covers any machine-based system that operates with some autonomy and infers how to generate outputs such as predictions, recommendations, or decisions. This captures recommendation engines, fraud detection, automated underwriting, dynamic pricing, predictive maintenance, and countless embedded ML components your engineering team may not even think of as "AI."

The Act distinguishes between providers (who develop or place AI systems on the market) and deployers (who use them). Both have obligations, though provider obligations are more extensive. What catches many companies off guard is that substantial modifications can flip your role from deployer to provider. If you retrain a licensed model on new data, alter its algorithms, or integrate it in ways that substantially change its behaviour, you may have become a provider and inherited the full set of provider obligations.
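A rough way to surface role flips during an inventory is to record the modification questions above as explicit flags. The sketch below is illustrative only: `Usage`, `Role`, and `screen_role` are hypothetical names, and a flagged result means "send to legal review", not "you are a provider".

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    REVIEW = "deployer on paper, possible provider: needs legal review"


@dataclass
class Usage:
    # Hypothetical screening answers for one AI system.
    developed_or_placed_on_market_by_us: bool
    retrained_on_new_data: bool = False
    altered_algorithms: bool = False
    integration_substantially_changes_behaviour: bool = False


def screen_role(usage: Usage) -> Role:
    """First-pass role screen; any modification flag escalates to review."""
    if usage.developed_or_placed_on_market_by_us:
        return Role.PROVIDER
    modified = (
        usage.retrained_on_new_data
        or usage.altered_algorithms
        or usage.integration_substantially_changes_behaviour
    )
    return Role.REVIEW if modified else Role.DEPLOYER


# A licensed model that was retrained on in-house data gets escalated.
licensed_model = Usage(
    developed_or_placed_on_market_by_us=False,
    retrained_on_new_data=True,
)
print(screen_role(licensed_model).value)
```

The screen deliberately never concludes "provider" from a modification flag alone, because whether a change is substantial is a legal judgment.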

4. What compliance means for high-risk systems

If your AI system falls into a high-risk category, you must satisfy specific operational obligations before placing it on the EU market. The key requirements are:

Risk management (Article 9): A documented system for identifying, analysing, and mitigating risks throughout the AI lifecycle.

Data governance (Article 10): Training, validation, and testing datasets subject to documented governance practices, including bias detection.

Technical documentation (Article 11): Comprehensive documentation of design, development, monitoring, and performance characteristics, kept current and available to authorities.

Record keeping (Article 12): Automatic logging of events throughout the system's lifetime.

Transparency (Article 13): Clear instructions enabling deployers to interpret outputs and use the system appropriately.

Human oversight (Article 14): Design enabling operators to understand capabilities, detect automation bias, and intervene when necessary.

Accuracy, robustness, cybersecurity (Article 15): Documented performance levels and measures against errors, faults, and attacks.

Quality management system (Article 17): Policies and procedures covering regulatory strategy, development, testing, risk management, and incident reporting.

After implementing these, you undergo a conformity assessment (internal or via Notified Body depending on category), sign an EU Declaration of Conformity, affix a CE mark, and register in the EU database. Substantial modifications require repeating the process.
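The requirement list above translates naturally into a per-system evidence checklist. The following is a minimal sketch, assuming you track a simple yes/no per Article; the `REQUIREMENTS` mapping mirrors the list in this section, while `open_gaps` and `ready_for_conformity_assessment` are hypothetical helper names.

```python
# Article number -> requirement, as listed in this section.
REQUIREMENTS = {
    9: "Risk management",
    10: "Data governance",
    11: "Technical documentation",
    12: "Record keeping",
    13: "Transparency",
    14: "Human oversight",
    15: "Accuracy, robustness, cybersecurity",
    17: "Quality management system",
}


def open_gaps(evidence):
    """Return (article, requirement) pairs that have no recorded evidence.

    `evidence` maps an Article number to True when documented evidence
    exists; missing entries count as gaps.
    """
    return [
        (article, name)
        for article, name in REQUIREMENTS.items()
        if not evidence.get(article, False)
    ]


def ready_for_conformity_assessment(evidence):
    """True only when every listed requirement has evidence."""
    return not open_gaps(evidence)


# Hypothetical status for one high-risk system.
evidence = {9: True, 10: True, 11: False, 12: True}
for article, name in open_gaps(evidence):
    print(f"Article {article}: {name}")
print("Ready:", ready_for_conformity_assessment(evidence))
```

A boolean per Article is a deliberate simplification. In practice each entry would point to documents in the technical file, but even this coarse view shows where the process would stall.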

5. What to do in the next six months

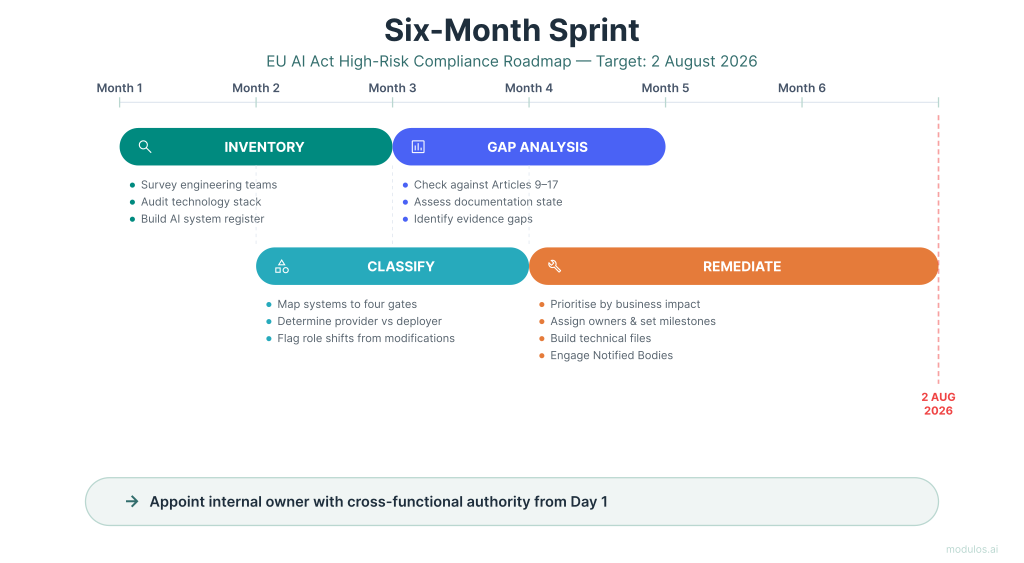

Six months is not long if you are starting from limited visibility and minimal documentation. But it is enough to establish a credible compliance trajectory.

Months 1-2: Inventory. Find every system that meets the Article 3 definition. Survey engineering teams, review procurement records, audit your technology stack. Output: a register of AI systems with metadata on function, ownership, data sources, and deployment.

Months 2-3: Classification. Map each system against the four gates. Determine whether you are provider or deployer for each. Flag any modifications that may have shifted your role.

Months 3-4: Gap analysis. For high-risk systems, assess current state against Article requirements. What documentation exists? What governance is in place? Where are the holes?

Months 4-6: Remediation planning. Prioritise by business impact and gap size. Assign owners. Set milestones. Begin building technical files. Engage Notified Bodies if third-party assessment is required.

Throughout: name an internal owner with cross-functional authority. AI Act compliance touches engineering, legal, risk, product, and operations. Without clear ownership, it will stall.
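As a starting point for the inventory and remediation steps, the register can be as simple as one typed record per system. The sketch below is an assumption-laden illustration: the field names, the 1 to 5 impact scale, and the impact-times-gaps score are our own choices, and the example systems are invented.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RegisterEntry:
    # Metadata named in the inventory step.
    name: str
    function: str
    owner: str
    data_sources: List[str]
    deployment: str
    # Filled in during classification and gap analysis.
    high_risk: bool = False
    business_impact: int = 1  # hypothetical scale: 1 (low) to 5 (critical)
    open_gaps: int = 0        # number of unmet requirements


def remediation_order(register):
    """Rank high-risk systems with gaps by impact x gap count, highest first."""
    candidates = [e for e in register if e.high_risk and e.open_gaps > 0]
    return sorted(
        candidates,
        key=lambda e: e.business_impact * e.open_gaps,
        reverse=True,
    )


# Invented example systems.
register = [
    RegisterEntry("credit-scoring", "scores loan applications", "Risk analytics",
                  ["bureau data"], "EU production",
                  high_risk=True, business_impact=5, open_gaps=3),
    RegisterEntry("cv-screening", "ranks job applicants", "HR engineering",
                  ["applicant CVs"], "EU production",
                  high_risk=True, business_impact=4, open_gaps=5),
    RegisterEntry("demand-forecast", "predicts stock levels", "Supply chain",
                  ["sales history"], "internal"),
]

for entry in remediation_order(register):
    print(entry.name, entry.owner, entry.business_impact * entry.open_gaps)
```

Multiplying impact by gap count is only one possible weighting. What matters is that every entry has a named owner and that the ranking rule is written down.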

6. What happens if you ignore this

Fines reach up to €35 million or 7% of global annual turnover, whichever is higher, for prohibited practices, and up to €15 million or 3% for other violations. But fines are not the primary risk.
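For a sense of scale, the ceiling for a given company is simple arithmetic. This sketch assumes the "whichever is higher" rule that applies to larger undertakings and does not model the separate treatment of SMEs; `FINE_TIERS` and `fine_ceiling` are illustrative names, and the turnover figures are invented.

```python
# Fixed cap in euros and turnover percentage per tier, as quoted in the text.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_violation": (15_000_000, 3),
}


def fine_ceiling(global_annual_turnover_eur: int, tier: str) -> int:
    """Maximum fine in euros: the higher of the fixed cap and the turnover share."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = global_annual_turnover_eur * percent // 100
    return max(fixed_cap, turnover_cap)


# Invented turnover figures: EUR 100 million and EUR 2 billion.
for turnover in (100_000_000, 2_000_000_000):
    for tier in FINE_TIERS:
        print(f"{turnover:>13,} {tier:<20} {fine_ceiling(turnover, tier):>12,}")
```

At €100 million turnover the fixed caps dominate (€35 million and €15 million). At €2 billion the percentage takes over, giving ceilings of €140 million and €60 million.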

The more immediate risk is market access. Without conformity assessment and CE marking, your high-risk system cannot legally be placed on the EU market. Customs and market surveillance authorities can block it at the border.

Your EU customers will enforce this before regulators do. Banks, insurers, and industrial companies are themselves deployers of high-risk AI. Their supervisors will ask what systems they use and what compliance evidence exists. The rational response is to push that risk onto vendors through procurement requirements, contract warranties, and audit rights. We are already seeing AI Act clauses in RFPs across Europe. If you cannot tell a credible compliance story, you will lose deals to competitors who can.

Common questions

"We do not sell into the EU directly. Does this apply to us?"

Yes. The Act applies based on where your AI system's output is used. If you license a model to a customer who deploys it in the EU, you are in scope. Your customers will put AI Act obligations into your contracts regardless.

"We are deployers, not providers. Do we have obligations?"

Deployers have real obligations, including fundamental rights impact assessments in some cases and human oversight requirements. More importantly, substantial modifications can flip you into provider status.

"Should we wait for the technical standards to be finalised?"

The requirements are already law. Harmonised standards will provide a presumption of conformity, but waiting for them leaves you scrambling in mid-2026 with no documentation and no processes. Start now and refine as standards emerge.

Getting started

The August 2026 deadline is approaching faster than most organisations realise. Companies that begin preparing now will have a significant advantage over those still hoping the law will be softened or delayed.

Modulos has built an AI governance platform designed to help enterprises navigate these requirements, connecting to your existing repositories and documentation to identify gaps and generate evidence for your technical file.

For a deeper dive into the regulation, see our comprehensive EU AI Act compliance guide.
