Understanding and Implementing the NIST AI Risk Management Framework

Discover how integrating the NIST AI Risk Management Framework helps your organization manage AI-related risks and develop responsible AI systems

What is the NIST AI Risk Management Framework (NIST AI RMF)?

The NIST AI Risk Management Framework (NIST AI RMF 1.0) is a set of guidelines created by the National Institute of Standards and Technology (NIST), a U.S. federal agency. Although it was developed primarily for use in the United States, its influence extends further, and organizations and stakeholders worldwide have adopted it.

It offers a structured way to identify, assess, manage, and monitor risks throughout the AI lifecycle, supporting the responsible development and deployment of AI systems. In April 2024, NIST also launched a Generative AI (GenAI) evaluation program, further expanding its efforts to promote safe and responsible AI use across different sectors.

The NIST AI RMF is adaptable to various industries and sectors, addressing the unique challenges posed by AI technologies. It focuses on transparency, accountability, and fairness, guiding organizations to align their AI practices with legal, ethical, and societal standards.

The Core Purpose of the NIST AI RMF

The core purpose of the NIST AI RMF is to provide organizations with a structured approach to managing AI-related risks. It covers key areas of AI risk management, including:

Identifying AI Risks

Spotting potential problems in AI systems, such as biases in data, security gaps, and unexpected outcomes.

Assessing AI Risks

Analyzing the impact of identified risks: evaluating how serious they are and determining which ones need immediate attention.

Managing AI Risks

Taking steps to mitigate or minimize these risks, ensuring AI technologies are used safely and ethically.

Monitoring AI Risks

Keeping an eye on AI systems to detect new risks early, ensure compliance, and maintain system integrity.

Key Components of the NIST AI RMF

Establish Policies and Procedures

Develop and implement clear policies to manage AI risks.

Identify and Document AI Risks

Recognize and document potential risks associated with AI systems.
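Documentation is easier to sustain when every risk is captured in the same structure. The Python sketch below shows one possible shape for a risk register; the field names (`risk_id`, `system`, `category`, `owner`), the `RiskRegister` class, and the example entries are hypothetical and are not prescribed by the NIST AI RMF.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class RiskEntry:
    """One row in a hypothetical AI risk register (fields are illustrative)."""
    risk_id: str
    system: str          # the AI system the risk belongs to
    description: str
    category: str        # e.g. "bias", "security", "reliability"
    owner: str           # person or team accountable for the risk
    identified_on: date = field(default_factory=date.today)


class RiskRegister:
    """Minimal in-memory register; a real one would persist entries and history."""

    def __init__(self) -> None:
        self._entries: dict[str, RiskEntry] = {}

    def add(self, entry: RiskEntry) -> None:
        # Reject duplicate IDs so each risk is documented exactly once.
        if entry.risk_id in self._entries:
            raise ValueError(f"duplicate risk id: {entry.risk_id}")
        self._entries[entry.risk_id] = entry

    def by_system(self, system: str) -> list[RiskEntry]:
        # Entries come back in the order they were added.
        return [e for e in self._entries.values() if e.system == system]

    def __len__(self) -> int:
        return len(self._entries)


register = RiskRegister()
register.add(RiskEntry("R-001", "loan-scoring",
                       "Training data under-represents applicants over 65",
                       "bias", "data-science-team"))
register.add(RiskEntry("R-002", "loan-scoring",
                       "Scoring API has no rate limiting",
                       "security", "platform-team"))
register.add(RiskEntry("R-003", "support-chatbot",
                       "Chatbot may misstate the refund policy",
                       "reliability", "customer-experience-team"))

print(len(register), [e.risk_id for e in register.by_system("loan-scoring")])
# prints: 3 ['R-001', 'R-002']
```

Keeping an explicit owner on every entry also gives the accountability component a natural starting point.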

Develop Metrics to Assess AI Risks

Create both quantitative and qualitative metrics to evaluate AI risks.
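On the quantitative side, two commonly used measures are an error rate and a group-fairness gap. The sketch below computes both on toy data. Demographic parity difference is only one of many possible bias metrics and is used here as an example; the groups "A" and "B" are hypothetical. Qualitative metrics, such as stakeholder feedback, need a separate review process.

```python
def error_rate(y_true: list[int], y_pred: list[int]) -> float:
    """Share of predictions that differ from the ground-truth labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t != p for t, p in zip(y_true, y_pred)) / len(y_true)


def demographic_parity_difference(y_pred: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups (0.0 = parity)."""
    if not y_pred or len(y_pred) != len(groups):
        raise ValueError("inputs must be non-empty and of equal length")
    rates = []
    for g in set(groups):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


# Toy data: binary labels and predictions for two hypothetical groups.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(error_rate(y_true, y_pred))                     # 0.25
print(demographic_parity_difference(y_pred, groups))  # 0.5
```

Here group A receives positive predictions 75% of the time and group B 25% of the time, a gap that would warrant investigation even though the overall error rate looks acceptable.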

Prioritize and Address Identified AI Risks

Rank AI risks based on severity and likelihood, and develop strategies to address them.
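A common way to rank risks by severity and likelihood is to score each on a small ordinal scale and multiply the two. The sketch below assumes 1 to 5 scales and hypothetical priority bands; the NIST AI RMF does not mandate a particular scoring scheme, so the scales and thresholds should be calibrated to your organization's risk tolerance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoredRisk:
    risk_id: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int    # assumed scale: 1 (negligible) to 5 (critical)

    def __post_init__(self) -> None:
        for name in ("likelihood", "severity"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        return self.likelihood * self.severity


def priority(score: int) -> str:
    """Map a 1-25 score to a band; the thresholds are hypothetical."""
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks: list[ScoredRisk]) -> list[ScoredRisk]:
    # Highest score first; ties are broken by severity, so a rare but
    # critical risk outranks a frequent but minor one with the same score.
    return sorted(risks, key=lambda r: (r.score, r.severity), reverse=True)


risks = [
    ScoredRisk("R-001", likelihood=4, severity=4),
    ScoredRisk("R-002", likelihood=2, severity=5),
    ScoredRisk("R-003", likelihood=3, severity=2),
    ScoredRisk("R-004", likelihood=5, severity=2),
]

for r in prioritize(risks):
    print(r.risk_id, r.score, priority(r.score))
# R-001 16 high
# R-002 10 medium
# R-004 10 medium
# R-003 6 low
```

Breaking ties on severity is a design choice: many teams prefer to address high-impact risks first even when their expected score matches a more frequent, lower-impact one.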

Ensure Legal and Regulatory Compliance

Align AI practices with existing laws and regulations.

Understand the Context and Impact

Evaluate the context in which AI systems operate and their potential impact on various stakeholders.

Monitor AI Systems Continuously

Implement ongoing monitoring practices to track the performance and risks of AI systems.
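One way to make continuous monitoring concrete is to compare the distribution of a model's inputs or outputs in production against a baseline. The sketch below uses the Population Stability Index (PSI), a drift statistic common in model monitoring that the NIST AI RMF does not itself prescribe. The equal-width binning, the `eps` floor, and the 0.1 / 0.25 thresholds are widely used rules of thumb, treated here as assumptions.

```python
import bisect
import math


def _bin_shares(values: list[float], edges: list[float]) -> list[float]:
    """Share of values in each bin; out-of-range values go to the outermost bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)
        counts[idx] += 1
    return [c / len(values) for c in counts]


def population_stability_index(baseline: list[float], current: list[float],
                               n_bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (actual - expected) * ln(actual / expected)."""
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread; cannot build bins")
    width = (hi - lo) / n_bins
    edges = [lo + i * width for i in range(n_bins + 1)]
    expected = _bin_shares(baseline, edges)
    actual = _bin_shares(current, edges)
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


def drift_status(psi: float) -> str:
    # Common rule-of-thumb thresholds, not values set by NIST.
    if psi < 0.1:
        return "stable"
    if psi < 0.25:
        return "moderate drift"
    return "significant drift"


baseline = [i / 100 for i in range(100)]       # e.g. last quarter's model scores
current = [0.5 + i / 200 for i in range(100)]  # this week's scores, shifted upward
print(drift_status(population_stability_index(baseline, baseline)))  # stable
print(drift_status(population_stability_index(baseline, current)))   # significant drift
```

In practice a check like this would run on a schedule, and a "significant drift" result would open a review rather than trigger an automatic change.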

Implement Risk Mitigation Strategies

Apply suitable techniques to reduce the impact of identified risks.

Promote Accountability and Transparency

Define clear roles and responsibilities, and communicate openly about how AI systems are developed and managed, to create a culture of accountability and transparency.
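Roles and responsibilities are often recorded in a RACI matrix (Responsible, Accountable, Consulted, Informed). RACI is a general project-management convention rather than something the NIST AI RMF requires, and the activities and team names below are hypothetical. The sketch checks that every activity has exactly one accountable party and at least one responsible party.

```python
VALID_ROLES = {"R", "A", "C", "I"}  # Responsible, Accountable, Consulted, Informed


def check_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return problems in a RACI matrix that maps activity -> {party: role}."""
    problems = []
    for activity, assignments in matrix.items():
        unknown = sorted({role for role in assignments.values() if role not in VALID_ROLES})
        if unknown:
            problems.append(f"{activity}: unknown roles {unknown}")
        accountable = [p for p, role in assignments.items() if role == "A"]
        if len(accountable) != 1:
            problems.append(
                f"{activity}: needs exactly one Accountable party, found {len(accountable)}")
        if "R" not in assignments.values():
            problems.append(f"{activity}: no Responsible party")
    return problems


# Hypothetical activities and teams for a single AI system.
raci = {
    "Approve model for deployment": {
        "Head of AI": "A", "ML lead": "R", "Legal": "C", "Customer support": "I"},
    "Monitor model drift": {"MLOps": "R", "ML lead": "R"},
}

for problem in check_raci(raci):
    print(problem)
# Monitor model drift: needs exactly one Accountable party, found 0
```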

Involve Diverse Stakeholders

Engage a wide range of stakeholders, including developers, users, and affected communities, in the risk identification process.

Evaluate the Effectiveness of Risk Controls

Regularly assess and adjust risk mitigation measures.
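Assessing a control usually means comparing the risk before and after it was applied. The sketch below assumes risks are scored as likelihood times severity and flags a control for adjustment when the residual score exceeds a risk appetite or the reduction is too small; the `appetite` and `min_reduction` defaults and the example controls are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlReview:
    risk_id: str
    control: str
    inherent_score: int   # likelihood x severity before the control
    residual_score: int   # likelihood x severity measured after the control

    def __post_init__(self) -> None:
        if self.inherent_score <= 0:
            raise ValueError("inherent_score must be positive")

    @property
    def reduction(self) -> float:
        """Fraction of the inherent risk score removed by the control."""
        return (self.inherent_score - self.residual_score) / self.inherent_score


def needs_adjustment(review: ControlReview, appetite: int = 8,
                     min_reduction: float = 0.5) -> bool:
    # Flag the control if residual risk is above appetite or too little risk was removed.
    # Both thresholds are hypothetical defaults.
    return review.residual_score > appetite or review.reduction < min_reduction


reviews = [
    ControlReview("R-001", "Reweight training data", 16, 6),
    ControlReview("R-002", "Add API rate limiting", 10, 8),
    ControlReview("R-004", "Human review of low-confidence outputs", 10, 4),
]

for r in reviews:
    print(f"{r.risk_id}: {r.reduction:.1%} reduction, adjust={needs_adjustment(r)}")
```

Here the rate-limiting control keeps residual risk within appetite but removes only a fifth of the inherent score, so it is flagged for a closer look.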

Maintain Continuous Oversight and Improvement

Ensure ongoing oversight and improvement of AI risk management practices.
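The NIST AI RMF itself organizes its Core into four functions: GOVERN, MAP, MEASURE, and MANAGE. The sketch below shows one possible way to group the components above under those functions. The mapping is this sketch's own assumption (several components could reasonably sit under more than one function), so check it against the framework's categories and subcategories before relying on it.

```python
from collections import defaultdict

# Informal, assumed mapping of the components above to the four NIST AI RMF Core
# functions. The framework's own categories and subcategories are more granular.
COMPONENT_TO_FUNCTION = {
    "Establish Policies and Procedures": "GOVERN",
    "Ensure Legal and Regulatory Compliance": "GOVERN",
    "Promote Accountability and Transparency": "GOVERN",
    "Involve Diverse Stakeholders": "GOVERN",
    "Maintain Continuous Oversight and Improvement": "GOVERN",
    "Understand the Context and Impact": "MAP",
    "Identify and Document AI Risks": "MAP",
    "Develop Metrics to Assess AI Risks": "MEASURE",
    "Evaluate the Effectiveness of Risk Controls": "MEASURE",
    "Prioritize and Address Identified AI Risks": "MANAGE",
    "Implement Risk Mitigation Strategies": "MANAGE",
    "Monitor AI Systems Continuously": "MANAGE",
}


def group_by_function(mapping: dict[str, str]) -> dict[str, list[str]]:
    """Invert component -> function into function -> [components]."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for component, function in mapping.items():
        grouped[function].append(component)
    return dict(grouped)


for function, components in group_by_function(COMPONENT_TO_FUNCTION).items():
    print(function, len(components))
```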

Why Implement the NIST AI RMF?


Better Risk Management

Identifying and addressing AI risks early makes operations safer and more reliable, leading to better-informed decisions and stronger overall resilience.

Regulatory Compliance

Meeting legal and regulatory requirements reduces legal risks and builds trust with customers, partners, and regulators through increased transparency.

Improved Efficiency

Integrating AI risk management into your existing processes streamlines operations, and regular updates help maintain AI system performance over time.

Competitive Edge

Staying ahead of regulatory changes and demonstrating responsible AI use positions your organization as a leader, driving innovation and setting you apart from competitors.

Ethical AI Practices

Using AI systems ethically aligns with societal values, protects stakeholder interests, and enhances your reputation, supporting long-term success.

Innovation and Growth

Promoting a structured approach to AI risk management fosters an environment of innovation, enabling your organization to explore new opportunities.

Trusted by 200+ organizations

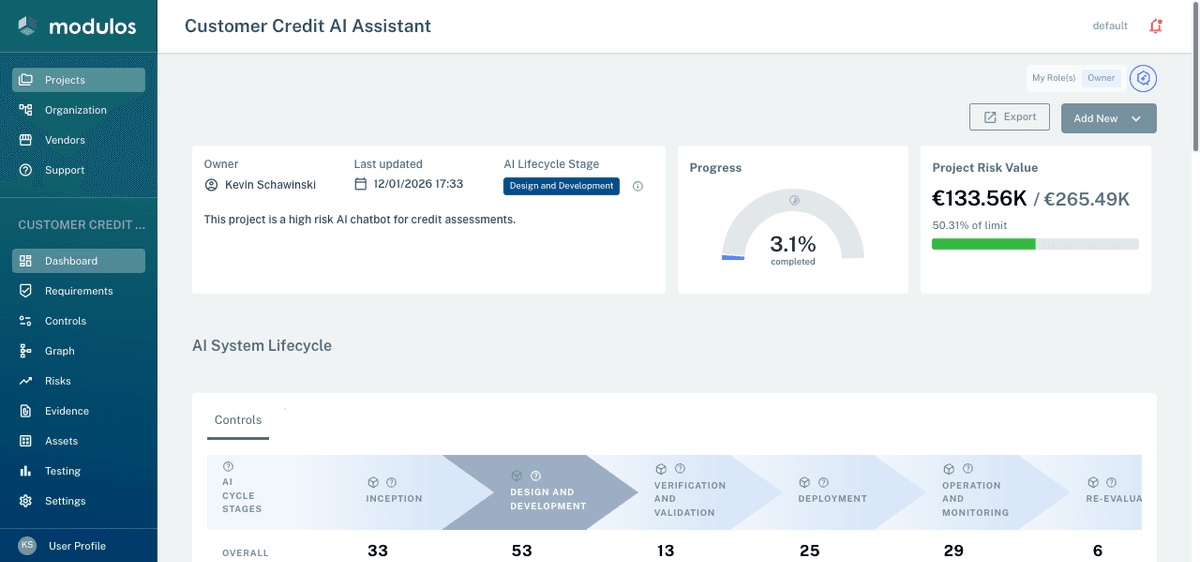

How Can Modulos Help You Implement the NIST AI RMF 1.0?

Modulos is actively involved in advancing AI safety and governance, including joining the AI Safety Institute Consortium established by NIST under the U.S. Department of Commerce. We provide clear guidance to help you align with the NIST AI RMF 1.0, keeping your AI systems safe, efficient, and compliant through centralized evidence collection.


FAQ about NIST AI RMF

What is the NIST AI RMF designed to do?

The NIST AI Risk Management Framework (AI RMF) is designed to help organizations manage and mitigate risks associated with artificial intelligence. It provides guidelines to ensure that AI systems are developed and used in a trustworthy, safe, and ethical manner, addressing concerns such as fairness, transparency, and accountability.

Who should use the NIST AI RMF?

The NIST AI RMF is intended for a wide range of organizations, including businesses, government agencies, and academic institutions. Any entity involved in the development, deployment, or use of AI technologies can benefit from implementing this framework to ensure responsible AI practices.

What are the benefits of implementing the NIST AI RMF?

Implementing the NIST AI RMF helps organizations identify, assess, and manage AI-related risks more effectively. It promotes transparency, accountability, and ethical AI use, which can enhance trust with stakeholders, improve regulatory compliance, and reduce the likelihood of adverse outcomes from AI systems.

Why is it important to involve diverse stakeholders?

Involving diverse stakeholders ensures that multiple perspectives are considered when identifying and assessing AI risks. This inclusive approach helps uncover potential biases, ethical concerns, and unintended consequences that might be overlooked by a homogeneous group, leading to more robust and fair AI systems.

What metrics can be used to assess AI risks?

Metrics for assessing AI risks can include both quantitative measures (such as error rates, bias scores, and performance benchmarks) and qualitative assessments (such as stakeholder feedback and ethical evaluations). The choice of metrics should align with the specific context and objectives of the AI system being evaluated.

Why is continuous monitoring important?

Continuous monitoring allows organizations to detect and respond to emerging risks in real time. It helps ensure that AI systems remain compliant with regulatory requirements, perform as expected, and do not develop unforeseen issues over time. Regular updates and adjustments based on monitoring data enhance overall system integrity.

How does the NIST AI RMF differ from other AI risk frameworks?

The NIST AI RMF is designed to be comprehensive and adaptable across industries and sectors. While other frameworks may focus on specific aspects of AI risk (such as ethics or security), the NIST AI RMF provides a holistic approach: its four core functions (Govern, Map, Measure, and Manage) integrate governance, risk mapping, measurement, and management into a unified framework.

How does the NIST AI RMF differ from the EU AI Act?

The NIST AI RMF is a voluntary framework focused on risk management best practices, while the EU AI Act is a regulatory requirement with legal obligations. The NIST framework provides guidance for managing AI risks, whereas the EU AI Act mandates specific compliance requirements with potential penalties for non-compliance.

How does the NIST AI RMF fit with enterprise risk management?

The NIST AI RMF is designed to integrate with existing enterprise risk management (ERM) practices. It provides a structured approach to managing AI-specific risks that can be incorporated into broader organizational risk management strategies, ensuring consistency and coherence across all risk management efforts.

What challenges might organizations face when implementing the NIST AI RMF?

Challenges may include the need for specialized expertise in AI risk management, resource constraints, and the complexity of integrating the framework into existing processes. Organizations may also face difficulties in keeping up with rapidly evolving AI technologies and regulatory requirements.

Can small businesses benefit from the NIST AI RMF?

Yes, small businesses can benefit from the NIST AI RMF. The framework is designed to be scalable and adaptable, allowing organizations of all sizes to implement its principles according to their specific needs and resources. Small businesses can use the framework to build trust with customers and partners by demonstrating responsible AI practices.

What resources are available to help implement the NIST AI RMF?

NIST provides various resources to assist organizations in implementing the AI RMF, including the AI RMF Playbook, crosswalks to other standards and frameworks, and the Trustworthy and Responsible AI Resource Center. Additionally, third-party consultants and software solutions, such as Modulos, can provide guidance and support for effective implementation.

How does the NIST AI RMF address ethical AI development?

The NIST AI RMF emphasizes the importance of ethical AI development by promoting transparency, accountability, and fairness. It encourages organizations to consider the societal impact of their AI systems and to engage diverse stakeholders in the development process to ensure that ethical concerns are addressed.

What role does transparency play in the NIST AI RMF?

Transparency is a core principle of the NIST AI RMF. It involves clear communication about how AI systems are developed, how they make decisions, and how risks are managed. Transparency helps build trust with stakeholders and ensures that AI systems can be scrutinized and held accountable.

Ready to Implement the NIST AI RMF in Your Organization?

Contact Modulos today to learn more about our solutions and how we can support your journey towards responsible AI governance. Our experts are here to help you understand and apply the NIST AI RMF, ensuring your AI systems are trustworthy, safe, and compliant.